You have a great Jupyter notebook you've been working on. If only you could share it with the world! Here are some options for getting your notebook online.

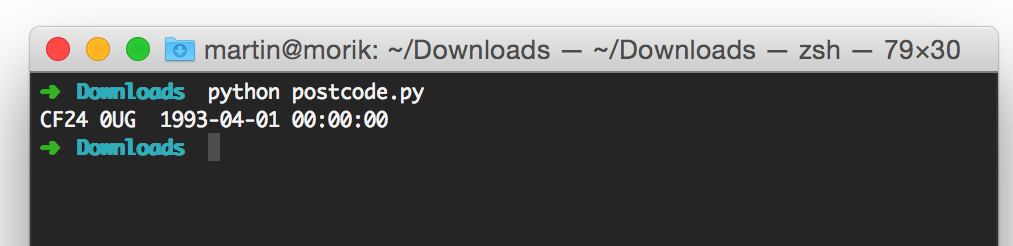

If you just want to show people a notebook without them running the code, nbviewer does the job: it renders the cells and their output (beware of long dataframes, which won't be cropped). Just put the notebook file (.ipynb) on GitHub and give nbviewer the link. If visitors like what they see, they can immediately launch a working version via a Binder link, or download the .ipynb file. Here's a simple example of what the user sees.
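Pasting the GitHub link into the nbviewer form is all that's needed, but if you want to put the link straight into a README, you can also build it yourself. The sketch below assumes nbviewer's `/github/<user>/<repo>/blob/<branch>/<path>` URL scheme for public GitHub repos; the function name and the `alice`/`demo` names in the example are hypothetical.

```python
from urllib.parse import quote


def nbviewer_url(user: str, repo: str, path: str, branch: str = "main") -> str:
    """Build an nbviewer link for a notebook in a public GitHub repo (hypothetical helper)."""
    # quote() percent-encodes spaces and similar characters but keeps "/" in the path
    return f"https://nbviewer.org/github/{user}/{repo}/blob/{branch}/{quote(path)}"


print(nbviewer_url("alice", "demo", "notebooks/analysis.ipynb"))
```

Opening the printed URL in a browser should show the rendered, read-only notebook.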

If your notebook is in a GitHub repo, you can skip nbviewer and build a working version of it via https://mybinder.org/. Just supply the repository URL and Binder will serve up all the .ipynb files, with the notebook cells ready to run.
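The form on mybinder.org produces a launch link you can share. A minimal sketch of that link, assuming Binder's `https://mybinder.org/v2/gh/<user>/<repo>/<ref>` scheme and its optional `labpath` query parameter (which opens a specific notebook in JupyterLab), might look like this; the helper and the repo names are hypothetical.

```python
from typing import Optional
from urllib.parse import urlencode


def binder_url(user: str, repo: str, ref: str = "HEAD", notebook: Optional[str] = None) -> str:
    """Build a Binder launch link for a public GitHub repo (hypothetical helper)."""
    url = f"https://mybinder.org/v2/gh/{user}/{repo}/{ref}"
    if notebook:
        # urlencode escapes "/" as %2F, matching the links the mybinder.org form generates
        url += "?" + urlencode({"labpath": notebook})
    return url


print(binder_url("alice", "demo", "main", "notebooks/analysis.ipynb"))
```

Binder installs the repo's dependencies from files such as `requirements.txt` or `environment.yml`, so it's worth adding one before sharing the link.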

Jupyter Book (jupyter{book}) lets you build a complete book out of notebooks. Here's an example with some notebooks.

The Voilà package "turns Jupyter notebooks into standalone web applications", or, if you prefer, it puts only the cell output on the webpage. Where it gets really useful is when you add widgets from ipywidgets to allow user interaction.
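As an illustration of the widget side, here is a minimal sketch of a notebook cell that Voilà could serve. The compound-interest calculator and all function names are hypothetical; the sliders come from `ipywidgets.interact`, which turns `(min, max, step)` tuples into sliders. The ipywidgets import sits inside `build_ui()` so the plain calculation can be reused (or tested) without a widget frontend.

```python
def future_value(principal: float, rate_pct: float, years: int) -> float:
    """Value of `principal` after compounding annually at `rate_pct` percent."""
    return principal * (1 + rate_pct / 100) ** years


def show_future_value(principal: float = 1000.0, rate_pct: float = 5.0, years: int = 10) -> None:
    # Printed output appears below the sliders, so Voilà shows it on the page
    print(f"Value after {years} years: {future_value(principal, rate_pct, years):,.2f}")


def build_ui() -> None:
    # Imported here so future_value() works even where ipywidgets isn't installed
    import ipywidgets as widgets

    # Float tuples become FloatSliders, int tuples become IntSliders;
    # the function's default arguments set the initial slider positions
    widgets.interact(
        show_future_value,
        principal=(100.0, 10_000.0, 100.0),
        rate_pct=(0.0, 15.0, 0.5),
        years=(1, 40),
    )

# In the notebook, a cell that simply calls build_ui() renders the controls.
```

Running `voila my_notebook.ipynb` (hypothetical filename) should then serve the notebook as a web page showing the sliders and the printed result, without the code cells.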

A GitHub repo with GitHub Pages enabled can serve a notebook as an interactive webpage using a package called nbinteract, but I've found it has trouble loading widgets, as seen in some of its tutorial pages.

Of course, Jupyter notebooks are not the only option: there's Kaggle, Google Colab and many more. There's an episode of the podcast Talk Python To Me about a paper that reviewed 60 (!) different notebook tools.